The SecureMac Interview: Security Researcher Patrick Wardle talks nation-state malware and the future of macOS

CNN once called Patrick Wardle “a sweet guy” who could “hack your Mac in 10 minutes”. But despite his current reputation as a macOS security expert, Wardle told us that his career path wasn’t straightforward:

I kind of had a roundabout way of getting involved in Mac security. I studied at the University of Hawaii—which I always kind of joke about, because it’s not, you know, maybe the most academically revered institution. But I had some really good professors, and most notably I was able to do some internships and work at some really cool places.

First and foremost, I did an internship at NASA, which was really interesting: Working on software for the space shuttles. So that was my foray into the world of software engineering and doing computer stuff, but in a really cool venue.

I also did an internship at the National Security Agency (NSA), which was incredibly intriguing to me—so much so that when I graduated, I began working there. That was a pivotal moment in my cybersecurity career, because that’s really where I learned about advanced hacking, nation-state adversaries, analyzing really in-depth malware. At the time, there weren’t a lot of other organizations or opportunities where you could do that legally.

So I really learned a lot, and it gave me a lot of really good foundational skills. Even today, when I’m analyzing a piece of malware, or breaking down a new security mechanism that Apple has added, or writing a defensive tool, I still have that mindset of what an adversary could or might be doing. And I think that really helps me in a lot of ways.

Nowadays, Patrick Wardle works in the world of Apple and Mac security full-time. Because of the nature of his work, he is well aware of the vulnerabilities and issues with macOS as a platform.

But he worries that many Mac users may have been lulled into a dangerous complacency—one which Apple itself may have unwittingly encouraged. We asked him what message he would want to give to people who use Macs:

One of the biggest risks Mac users run is assuming that because they’re on a Mac, they are immune to threats: immune to viruses, malware, Trojans, implants, etc.

I think that the reason for this mentality is, for one, that for a long time there really wasn’t a lot of Mac malware! All of your Windows friends would be getting hacked and infected, and on your Mac you were happy, you weren’t really exposed to a lot of those threats. Apple’s marketing also did a great job of pushing this narrative.

But now this has become rather problematic, because we have a lot of Mac users who are either somewhat naïve or overconfident from a security point of view. And this can be a problem, because they might be clicking on those phishing emails, downloading apps from shady places on the Internet, running that app from that legitimate-looking email which is actually malware.

So, first and foremost, I think it’s really important to understand that a Mac is a computing system—and thus it’s going to have vulnerabilities.

And as Macs become more prevalent and pervasive across the enterprise and in the end user space, hackers are going to target them more and more. We’re seeing that already: Adware on macOS is becoming rather prolific; there is an increase in Mac malware targeting cryptocurrency; there’s ransomware. There is growth in those areas.

The silver lining in all of this is that the vast majority of Mac threats affecting everyday users are not particularly dangerous—provided that some precautions are taken and best practices followed.

As Wardle explains:

These Mac malware samples are indiscriminately targeting Mac users. So the good news is, if you are a Mac user and you take some basic, standard steps to secure your system, you’re going to be far ahead of the average Mac user. This means things like: Don’t reuse passwords; make sure you’re always running the latest version of macOS; don’t click on random email links; don’t download apps from shady or untrusted websites.

Luckily, at least for the everyday Mac user, the Mac malware that’s going to be targeting them is going to be indiscriminate and rather unsophisticated. So if you perform these best practices, generally speaking you’ll be safe.

On the subject of updates, Apple has recently released its latest version of macOS: Catalina. Catalina has sparked a great deal of discussion in the security community due to some fairly significant security and privacy changes. These changes affect both third-party developers and Mac users.

Developers, for example, are being pushed away from kernel extensions, which Apple has deprecated. Instead, they will need to use one of several alternative frameworks provided by Apple in order for their software to keep working on macOS going forward. In addition, notarization is now mandatory for software distributed outside the Mac App Store.

End users, too, will notice a difference in Catalina. An enhanced Gatekeeper and stronger data protection mean that macOS users will be notified when apps are attempting to access files and locations on their system, and will be asked to decide whether to allow access or not. This was already a feature of earlier versions of macOS, but Catalina greatly expands this protection—and thus will likely result in Mac users having to deal with a larger number of prompts and alerts.

We asked Wardle for his take on Catalina, and if he thought Apple was moving in the right direction.

From a purely security point of view, we are moving in an excellent direction. Apple’s security team is full of brilliant security researchers, and so they’re continually improving the security of the operating system. This is one of the reasons why I always recommend users update their OS regularly, because you get a lot of built-in, baked-in security mechanisms in each new version of the operating system.

However—and here’s where the challenge comes in—does this impact usability?

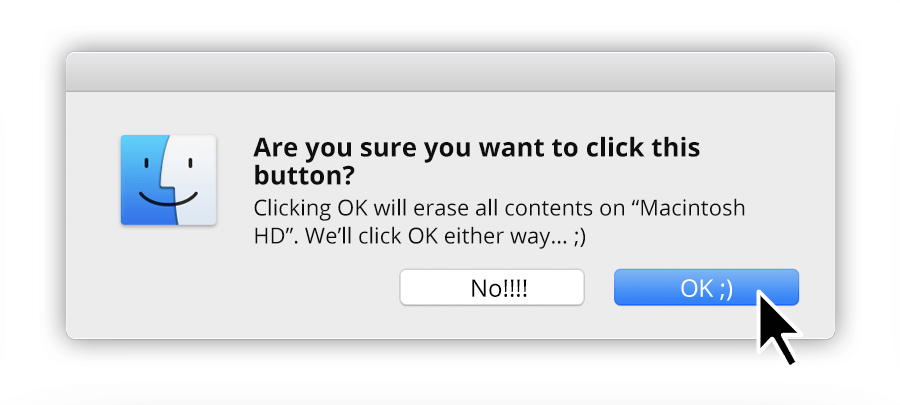

Security and usability are almost on opposite ends of the spectrum. And as Apple increases the security of the system, this is going to impact the user experience. For example, now there are a lot of pop-ups: “Do you want this website to download this app?” “Do you want this app to be able to access the Desktop?”

It’s tough to find that balance. I’m really not critiquing Apple here, because it’s a very difficult problem. But I foresee users kind of just getting annoyed and frustrated—and clicking “yes” through everything. This is a problem that any security tool which displays alerts to its users is going to face. So we’ll see if that gets scaled back in the end.

Another critique is that any time Apple removes a capability that’s used by third-party security tools, this can impact the capabilities and functionality of those tools. That’s another challenge. So for example, yes, removing kernel extensions reduces the attack surface, but it could potentially impact the ability of third-party security tools to do their job. Apple does provide alternative APIs (there’s a new Endpoint Security framework), but it’s still very challenging for these third-party security companies who now basically have to rewrite their tools to conform to what Apple wants.

Wardle’s remarks echo what many in the dev community have said about Catalina. But Apple seems committed to moving macOS in the direction of iOS, further locking down the operating system, purportedly with the goal of making the Mac more secure for users. However, Wardle is quick to point out that Apple’s moves with respect to macOS could, ironically, have negative security ramifications:

You have this perfect case study in the iPhone. The iPhone is an incredibly secure device: The average iPhone user is not going to get infected. But advanced nation states are developing (and already have developed) the ability to remotely infect iPhones. And once they get in, once they’ve hacked and infected one of these devices, because it’s such a locked down device, there’s really no insight into what’s happening on it. You can’t even see a list of what processes are running! So these advanced adversaries, these nation states, know that an iPhone is a very hard target to break into—but once they’re in, they’re in.

And every year or so, we see reports where the Chinese are targeting human rights activists with really nice iOS exploits. Israeli groups are developing similar capabilities. So those capabilities and exploits are out there—and they’re generally only uncovered when someone detects that in network traffic or gets a funky link and then goes and manually analyzes the website that’s serving it up. They’re rarely found—if ever, that I’m aware of—after someone says “Oh, I figured out my phone was hacked”. And that’s a little scary considering how much we have on our devices.

Mac, on the other hand, is a softer target, but we have third-party security tools which might provide some insight into what’s happening on the system and allow us to detect these adversaries.

It’s difficult. iOS is a more locked-down platform, but it’s a double-edged sword, because the effect of this is that if I’m an average user, I have no way of knowing if my iPhone has been hacked. Which bothers me! On my Mac, at least, I like to believe that I have the expertise and I can run tools which provide me data and information that can help me see if it has been compromised.

So I hope Apple doesn’t go the route of trying to lock down macOS too much, because unfortunately we’re going to then end up in a scenario where yes, the average user is not going to have to worry about getting adware anymore, which is a good thing, but advanced adversaries and nation states are going to be laughing all the way to the bank because their implants and malware are not going to be detected, since security tools are no longer going to provide that additional insight. It’s something to be aware of as Apple continues to lock down macOS. There’s some downside to locking down something too far.

Intelligence agencies and militaries around the world are continuing to develop their cyberwarfare and surveillance capabilities. Given Wardle’s time at the NSA, and his research work on nation-state malware, we asked him if he could clarify the difference between advanced threats and the kind of garden-variety malware likely to be encountered by everyday users.

I would say there are a few differences.

First and foremost is the targeting—either the targeting mechanism or the nuances of the targeting.

When hackers are writing “everyday” Mac or Windows malware, they’re largely driven by financial gain. This means they’re indiscriminately trying to infect as many users as possible. They’re just sending out large spam campaigns, hacking websites, serving malicious ads, etc. And this is good if you’re an average user, because if you’re following these best security practices you’re going to be fine.

Nation states and Advanced Persistent Threat (APT) groups, on the other hand, have a very specific target set in mind. So they will go after their targets very persistently, and often in a very targeted and sophisticated way.

If you’re targeted by one of these nation states, and if, for example, they want to attack you via email, they’re going to craft an incredibly customized, personal email that looks completely legit. They might hack a website that you, specifically, visit. And that’s very, very difficult to defend against. I don’t want to say there’s nothing you can do, but it’s very difficult because they’re so motivated and well-resourced; so sophisticated and capable.

We also see that the malware samples they deploy are very unique and very targeted. So it’s unlikely that the average third-party antivirus product or even Apple themselves will be able to block or detect that.

This malware is also usually really well-tested—so there won’t be obvious indicators of infection. Your computer isn’t going to start running slowly. There aren’t going to be pop-ups. This malware is essentially going to be invisible.

So these are the main differentiators. Any time I’m looking at a piece of malware, if it’s a nation-state sample, I’m a little more excited and interested, because it’s generally more unique, more elegantly written. It’s not just another run-of-the-mill adware that’s using the same old lame tricks. As security researchers, we kind of almost get excited when we see these nation-state or more sophisticated samples, because they’re just more elegant, and thus are often more of a challenge to analyze. But also there’s often more insight to be gleaned by analyzing these samples.

Again, this is usually something that the average Mac user doesn’t have to worry about. And if you are a person who has to worry about this, I would say you have bigger problems!

Wardle’s interest in nation-state malware samples goes beyond simply analyzing them. He has also been experimenting with reengineering these samples as a way of learning more about them. He gave an in-depth talk about this subject at the 2019 DEF CON security conference, in which he explained the process and laid out the benefits of repurposing nation-state malware.

In the course of his presentation, Wardle mentioned that security researchers aren’t the only ones “recycling” advanced malware in this way. Nation states themselves will often capture malware created by other countries, and then harness that malware for their own purposes.

Wardle notes that one of the benefits of this practice is the ability to deploy malware that “looks like” the work of another nation’s cyberintelligence group. In this way, nation states can deploy malware knowing that even if they are discovered, their covert activities may well be misattributed to another country.

In light of this, we asked Wardle how security researchers go about attribution: how, for example, they know that a given malware sample is the work of Russian intelligence and not the CIA.

That’s the million-dollar question! It’s interesting, because it’s very appealing for advanced adversaries to repurpose or recycle another group’s malware.

For one thing, it can allow you to deploy malware in risky situations, for example, when you hack a system and find that another hacking group is already there. If you want presence on that system, you can lay down your own custom, homegrown malware—but the other group might detect it, grab it, and then gain insight into your capabilities. That’s obviously less than ideal! Whereas if you use another country’s malware, then if it does get detected, you’re really not tipping your hand too much to the other hacker group.

But the main benefit is that repurposing malware muddies the attribution picture. And attribution is already a very difficult thing. We’re often forced to say “OK, this is likely the North Koreans because of the IP addresses connecting back” or “the targets that they’re going after align with their national security interests” or “the code characteristics map to these other malware samples”. And so in a way it’s very subjective. It’s very difficult. And a lot of security researchers kind of just shy away.

The other thing is that, at the end of the day, does it really matter that much? If you catch the North Koreans deploying some malware that’s targeting cryptocurrency exchanges, what are you going to do? Call up the North Koreans and tell them to stop? There are clearly some geopolitical gains to be had from knowing who’s doing the hacking—if you know whether it was the Russians or the Chinese who stole all your files. But the end result is still that your files are all stolen, which is the bigger issue!

So yes, attribution is a very difficult thing. Recently, there was research that came out in an NSA and GCHQ joint report which basically said that an APT group associated with the Russians had hacked the Iranian infrastructure and stolen all their tools—and were using their tools and infrastructure to do more hacking. So now you have nation states not only using tools but actually exploiting and repurposing another nation state’s infrastructure! This makes attribution incredibly difficult. But one takeaway was that the GCHQ and the NSA had enough insight to cut through all these false flag operations and still track the attacks back to the real authors. They’re able to do that because they have great insight, great analysts, and access to data that we don’t have.

In a security landscape in which advanced actors are repurposing one another’s malware (and even infrastructure), attribution becomes very difficult for independent researchers. Some government intelligence agencies may have the ability to trace such attacks back to their source, but this raises a difficult question: Should we take their attributions at face value?

It is not impossible to imagine a scenario in which a cyberattack could be used as a casus belli for military action. But the world’s governments have a lamentable history of going to war on the basis of shaky justifications and questionable intelligence. We asked Wardle whether or not the general public has reason to be skeptical of the government’s (or anyone else’s) malware attribution:

This may become a more relevant question as cyberattacks start to have real-world impacts. Stuxnet is the quintessential example. And now anytime there’s an incident at a gas production facility, and things explode, people ask, “Was this a cyberattack?” And often it’s not, but it would be naïve to think that’s never going to be a possibility.

You could have a scenario where, for example, a Middle Eastern country doesn’t like another Middle Eastern country, and so they perform an attack on a third country to make it look like it was their adversary. And then they sit back and watch those two countries escalate, first their cyberattacks, but then that might spill over into kinetic attacks or even more traditional military warfare.

I don’t think that’s an implausible scenario. So I think when countries are making accusations—especially if it’s something impacting a SCADA system or something that has real-world impacts—if there is attribution, then the onus will be on the accuser to make their case, and the standard of evidence required will need to be very high. Hopefully such attribution is not something that people would blindly accept.

So we’d have to say: “OK, you’re claiming it’s Country X. Why do you think that is? And have you thought about the possibility that Country X’s tools could have been repackaged by Country Y to make it look like it’s Country X?”

I think it’s important for us to talk about this, in the sense that people need to realize that attribution is very difficult, and also that attackers are already repurposing and recycling other countries’ tools and infrastructures and exploits to muddy the attribution picture.

So if we are ever going to take some action based on this kind of attribution, I think the evidence should be overwhelming. Hopefully it never comes to that, but I think it could be a possibility—so we should just be very aware of all of this and proceed carefully.

The threat landscape is clearly becoming more complex, and this is as true for macOS users as anyone else. Apple seems to have recognized this—at least somewhat—and is showing signs of opening up to the security community in a bid to make its platforms more secure.

Cupertino recently announced a long-awaited bug bounty program for Macs as well as an expanded, $1 million bounty program for iOS. They also said that they would be allowing a limited number of security researchers outside of the company to experiment with developer versions of the iPhone so that they could look for ways to make the mobile OS safer.

Wardle sees these developments as significant, both for what they mean for researchers like himself and for what they signal about where Apple is going as a company:

I’m sometimes critical of Apple’s approach to security, but that’s largely targeted at their marketing department or their corporate decisions. I’ve always had a lot of respect for their security team. So now it’s amazing to see a lot of the things that the security teams have been pushing for coming to the forefront. It’s really amazing to see Apple (I think kicking and screaming somewhat, but still) growing up a little bit in terms of their security posture.

It seems like a more emotionally mature approach to security. If you look at what other companies are doing—at what other companies that take security and privacy seriously have done for a while—they’re definitely taking a similar approach. Microsoft is a great example. Their security really suffered for a long time. But they then kind of said, “OK, internally we’re going to really try to improve things…but we’re also going to embrace the external community. We’re going to have a security conference, BlueHat. We’re going to have a bug bounty program: We’re going to pay hackers a lot of money if they find and report bugs.” These are all things which ultimately have a positive impact on the security of the system, which then trickles down and benefits the users of said system.

You know, I have MacBook Pros, and iPhones, and Apple Watch. I love Apple products. I always joke that I’ve “drunk the Apple juice”. So as a passionate Mac user, and as a user who cares about security, it’s really cool to see Apple taking this more proactive approach. And as I said, it’s kind of the more emotionally mature approach, because Apple is basically saying, “Hey, we need some help. We can’t do this ourselves. Security is very hard. We’re going to try to do our best: to audit our own code, and make sure it ships tested. But the reality is that code is going to have bugs. So external security researchers, white hat hackers, help us”.

It’s a very mature thing for Apple to finally do. And as a security researcher, I’m very happy about this, because I’m confident that it will bring more eyes onto the Mac platform, which will ultimately reveal more bugs, which will then get reported to Apple and be fixed.

The other good thing is that Apple is now offering some financial incentives. It’s a really good opportunity for people who find bugs as their job. Before, the only option was, essentially, to sell bugs to exploit brokers. While those will always pay more (obviously there’s some ethical quandary there, and some people are still going to be selling bugs to the highest bidder), I’d like to believe that there’s a large number of people that were previously kind of caught in the middle. They said, “OK, look. This bug took me six months to find, and productize. It was a lot of work. I have bills to pay…” But now that Apple is offering decent payments for this, there is a great alternative which is, ethically, a lot more digestible. Now these bugs can be handed over to Apple, and ultimately get patched. And then it’s really a win-win. Mac users are more secure. Apple’s stoked. And the researchers who spend a lot of time and money finding the bugs are compensated. So there’s really no downside.

So I’m really happy, I feel really good, I’m very optimistic about Apple’s awakening to this more transparent, interactive model of security. That’s something I was really pushing for. I think it’s going to have a lot of really positive impacts on the security of their system—which, again, hugely benefits their end users. So kudos to Apple!

Patrick Wardle is, of course, closely connected to the independent research community—and has done his fair share of bug hunting and exploit development over the course of his career.

He continues to build open-source Mac security tools for Objective-See, and is the organizer of the Mac security conference Objective by the Sea.

We asked Wardle about the future of these ventures, both in the near-term and the years to come:

As for Objective-See, it’s just onwards and upwards. The focus is always to create free, largely open-source security tools for end users—especially end users who are passionate about security. Apple continually improves the security of their system, but still, a lot of times this is done in ways that may leave some loopholes, or in ways that don’t offer users much insight. So my tools really try to provide that insight into what the system is doing and protect users from a variety of attacks.

And as for the Mac security conference we’ve organized, Objective by the Sea, we are going to be holding version 3.0 in March, back in Hawaii. So I’m really excited about that. Objective by the Sea is just something that’s grown into this amazing community event, and we’re blessed to have some of the top speakers in the world—Ian Beer from Google, a lot of really well-known Mac security researchers from antivirus companies and research firms—so that’s just incredible.

But it’s amazing for the attendees too, to have this kind of “boutique” experience where students can approach these top researchers, and ask them questions, and network. To me, that’s the best part of it.

So we’re going to continue to focus on that. We are incredibly thankful to sponsors, for example SecureMac, because conferences are obviously expensive endeavors. We’re also thankful for all our Patreon supporters. That’s why, even if they’re just giving one dollar a month, they can attend the conference without paying the attendance fee. Having these sponsors who support our community efforts is hugely, hugely important. It’s amazing to see that financial support from these very forward-thinking companies, and to see how the conference has grown into this can’t-miss experience.

You know, the research I do, and all the tools I write, I think that’s one way that I can give back to the community. And I love writing software, I love analyzing malware. But the conference is really something that brings a lot of like-minded people together in the same space, always in an incredible location like Hawaii or Monaco. It’s just this amazing environment where people thrive. To see that, and to help foster that, is something I’m super passionate about. So we’re going to continue to do that—hopefully forever.

SecureMac would like to thank Patrick Wardle for taking the time to speak with us—and for all he does for the macOS community. To learn more about Patrick’s work, visit his site at objective-see.com or follow him on Twitter. To learn more about Objective by the Sea, or to become a supporter, visit the conference site or the associated Patreon page.